Version your proto definitions for stability.

It's easy to forget to update a protocol buffer file and break a server call when the specification no longer aligns, especially with microservices. If a server-to-server request doesn't match the proto definition exactly, the request will fail. Therefore, it is important to set up a scalable pipeline for your proto definitions throughout your application development.

How to structure protobuf files?

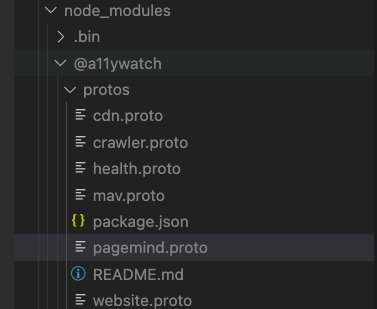

Depending on the requirements, setting up your proto definitions can vary from company to company or app to app. gRPC was designed to use protocol buffers, or .proto files, to define a schema from which a server and a client can be generated that exchange messages in the defined formats. At first you might set up a .proto file in two locations, across two different GitHub repos or folders that do not share common modules, and get the Lego blocks to connect. At some point you may end up with multiple service definitions and messages that are duplicated across the repos. With gRPC you can import the definitions you need across files to prevent code from being duplicated.

On that note, it might help to set up one central location to scaffold out the files and ship them through dependency managers such as npm, cargo, or composer for your apps to consume. An example of this can be found at this location and tested using npm (the Node package manager) by running npm i @a11ywatch/protos. You will see the definition files inside the node_modules folder.
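As a minimal sketch of importing shared definitions across files, the two proto3 files below show one message declared once and reused by a service in another file. The file names, package names, messages, and service (common.proto, scanner.proto, PageRequest, ScanReply, Scanner) are hypothetical and only for illustration; they are not taken from the @a11ywatch/protos package.

```proto
// common.proto (hypothetical shared file)
syntax = "proto3";

package example.common;

// Shared message that several services can reuse instead of redefining it.
message PageRequest {
  string url = 1;
}
```

```proto
// scanner.proto (hypothetical service file)
syntax = "proto3";

package example.scanner;

// The import path is resolved relative to the directory passed to protoc
// with --proto_path, so both files are assumed to live in the same folder.
import "common.proto";

message ScanReply {
  bool accepted = 1;
}

service Scanner {
  // The request type comes from common.proto and is referenced by its
  // fully qualified name.
  rpc Scan (example.common.PageRequest) returns (ScanReply);
}
```

Keeping files like these in one central package means every consumer generates code from the same PageRequest definition rather than from a local copy.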

Versioning benefits following semver.

When you follow semver (semantic versioning) with gRPC, each version number signals the level of compatibility that consumers can expect. For the most part, changes to a gRPC service are non-breaking across updates, except when you do the following.

Binary breaking changes

The following changes are non-breaking at a gRPC protocol level, but the client needs to be updated if it upgrades to the latest .proto contract or client gRPC builder. Binary compatibility is important if you plan to publish a gRPC library across package managers.

- Removing a field - Values from a removed field are deserialized to a message's unknown fields. This isn't a gRPC protocol breaking change, but the client needs to be updated if it upgrades to the latest contract. It's important that a removed field number isn't accidentally reused in the future. To ensure this doesn't happen, specify deleted field numbers and names on the message using Protobuf's reserved keyword.
- Renaming a message - Message names aren't typically sent on the network, so this isn't a gRPC protocol breaking change. The client will need to be updated if it upgrades to the latest contract. One situation where message names are sent on the network is with Any fields, when the message name is used to identify the message type.
- Nesting or un-nesting a message - Message types can be nested. Nesting or un-nesting a message changes its message name. Changing how a message type is nested has the same impact on compatibility as renaming.
- Changing namespace - Changing namespace will change the namespace of generated language types. This isn't a gRPC protocol breaking change, but the client needs to be updated if it upgrades to the latest contract.
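To illustrate the reserved keyword mentioned for removed fields, here is a short proto3 sketch. The message and its fields (ScanRequest, url, user_agent, include_subdomains) are hypothetical; the example assumes that field 2, previously named user_agent, was deleted in an earlier release.

```proto
syntax = "proto3";

package example.scanner;

message ScanRequest {
  // Field 2 ("user_agent") is assumed to have been removed. Reserving both
  // its number and its name makes protoc reject any future field that
  // tries to reuse either one.
  reserved 2;
  reserved "user_agent";

  string url = 1;
  bool include_subdomains = 3;
}
```

Field numbers and field names go in separate reserved statements, because Protobuf does not allow mixing them in a single one.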

Doing cool things with our central versioning system.

Now that we have the important stuff down, we can take advantage of automated tools that help improve gRPC workflows and productivity.

